Stefano Puliti

Researcher

Authors

Stefano Puliti Emily R. Lines Jana Müllerová Julian Frey Zoe Schindler Adrian Straker Matthew J. Allen Lukas Winiwarter Nataliia Rehush Hristina Hristova Brent Murray Kim Calders Nicholas Coops Bernhard Höfle Liam Irwin Samuli Junttila Martin Krůček Grzegorz Krok Kamil Král Shaun R. Levick Linda Luck Azim Missarov Martin Mokroš Harry J. F. Owen Krzysztof Stereńczak Timo P. Pitkänen Nicola Puletti Ninni Saarinen Chris Hopkinson Louise Terryn Chiara Torresan Enrico Tomelleri Hannah Weiser Rasmus Astrup

Abstract

No abstract has been registered.

Authors

Xinlian Liang Yinrui Wang Jun Pan Janne Heiskanen Ningning Wang Siyu Wu Ilja Vuorinne Jiaojiao Tian Jonas Troles Myriam Cloutier Stefano Puliti Aishwarya Chandrasekaran James Ball Xiangcheng Mi Guochun Shen Kun Song Guofan Shao Rasmus Astrup Yunsheng Wang Petri Pellikka Mi Wang Jianya Gong

Abstract

No abstract has been registered.

Authors

Daniel Moreno-Fernández Johannes Breidenbach Isabel Cañellas Gherardo Chirici Giovanni D’Amico Marco Ferretti Francesca Giannetti Stefano Puliti Sebastian Schnell Ross Shackleton Mitja Skudnik Iciar Alberdi

Abstract

No abstract has been registered.

Division of Forest and Forest Resources

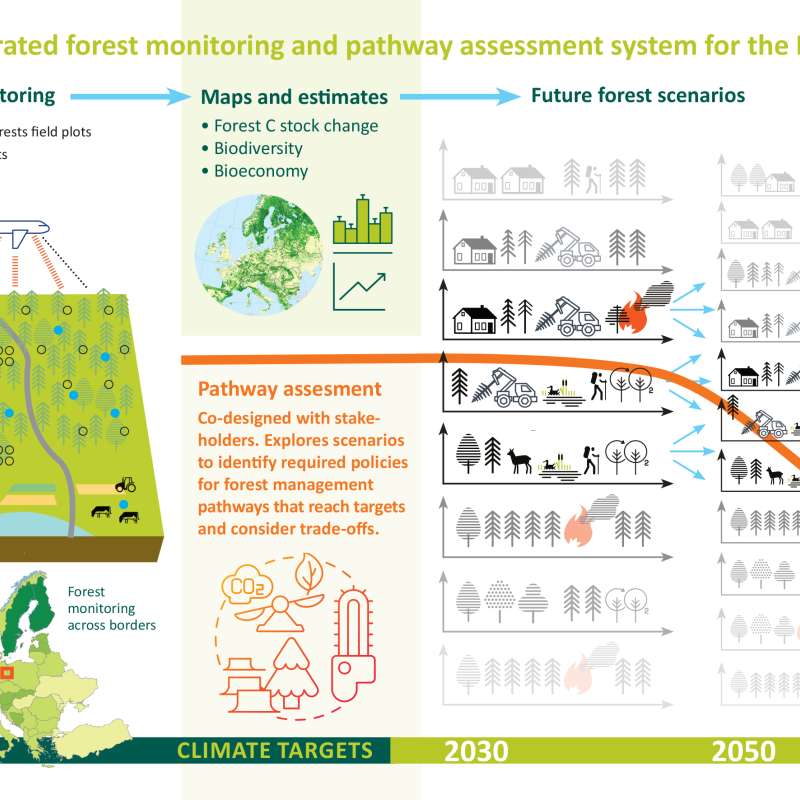

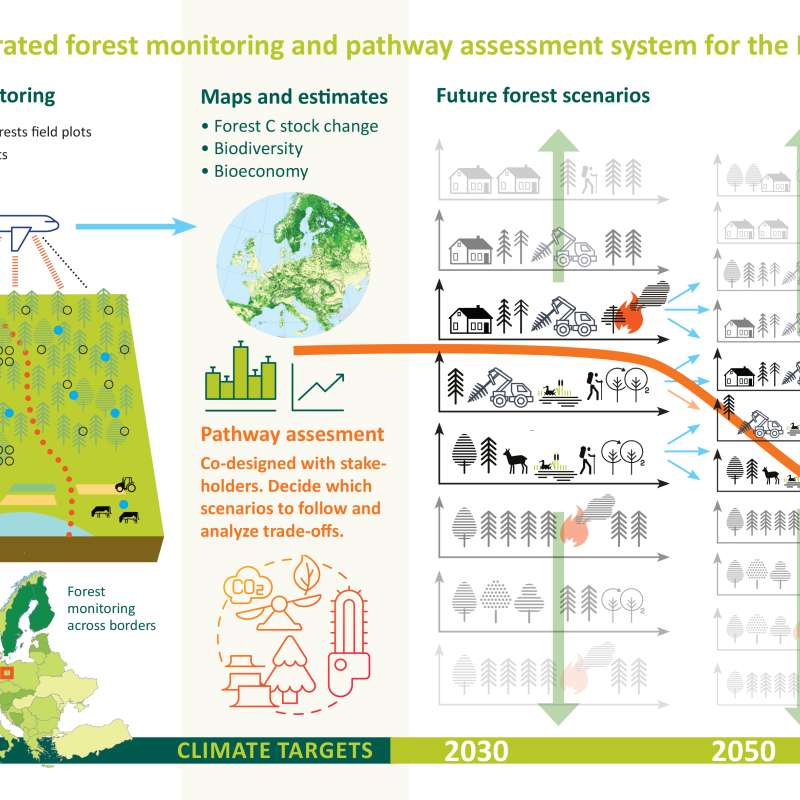

PathFinder: Towards an Integrated Consistent European LULUCF Monitoring and Policy Pathway Assessment Framework

Division of Forest and Forest Resources

A Decision Support System for emerging forest management alternatives

This project aims to develop advanced tree growth models using LiDAR-derived, high-density point cloud data to improve the simulation of forest dynamics under close-to-nature silvicultural practices. By modeling tree-level growth in structurally complex and heterogeneous stands, these models will support more accurate, spatially explicit forest simulations and inform sustainable and diversified forest management decisions.

Division of Forest and Forest Resources

SFI SmartForest: Bringing Industry 4.0 to the Norwegian forest sector

SmartForest will position the Norwegian forest sector at the forefront of digitalization, resulting in large efficiency gains, increased production, reduced environmental impacts, and significant climate benefits. SmartForest will produce a series of innovations and act as the catalyst for an internationally competitive forest-tech sector in Norway. The fundamental components for achieving this are already in place: a unified and committed forest sector, a leading R&D environment, and a series of progressive data and technology companies.